Monday, August 30, 2010

Isotropic and anisotropic earth media in exploration geophysics

Sunday, August 29, 2010

More Crew Profiles

1. Name/Title

-Nicky Applewhite/OS

2. Fave food/music/vacation destination

-Fried Chicken/R & B/Atlantic City

3. If you were stuck on a deserted island with only one person from this boat, who would you choose and why?

-Dave DuBois (OBS) because he's a jokester

1. Name/Title

-Rachel Widerman/3rd Mate

2. Fave food/music/constellation

-Grilled Calamari/depends on my mood/Orion

3. If you were stuck on a deserted island with only one person from this boat, who would you choose and why?

-Hervin because he's gonna cook and bring his iPod

1. Name/Title

-Hervin Fuller/Steward

2. Fave food/music/kitchen utensil

-Italian/Opera/12 inch knife

3. If you were stuck on a deserted island with only one person from this boat, who would you choose and why?

-Jason (Bosun) because we like to hang out and we will be good at solving problems

1. Name/Title

-Mike Tatro/Acquisition Leader

2. Fave food/music/tool

-Steak/Country/Monkey Wrench

3. If you were stuck on a deserted island with only one person from this boat, who would you choose and why?

-Carlos (Source Mechanic) because he's my fave

Friday, August 27, 2010

Marine Multi-channel Seismic Processing (part-2)

With the raw shot data loaded and the geometry assigned, we can start improving the data step by step until we have the final image of the cross-section. The first thing we do is apply an Ormsby bandpass filter to remove noise outside the signal frequency band, such as noise generated during acquisition (ProMAX module: Bandpass Filter). Remember that we determined the main frequency range with the Interactive Spectral Analysis module when we first got the raw shot data. We use that range to set the filter. You can see a big difference after applying this filter to the raw shots. The second thing we do is edit the traces: kill bad channels (ProMAX module: Trace Kill/Reverse) and remove spikes and bursts (ProMAX module: Spike and Noise Edit). Remember that we already identified the bad channels with Trace Display when we first got the raw shot data, so we enter that information in Trace Kill to get rid of them. After these two steps the data already look much better than the raw data, don't they?
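As a rough illustration of these two steps, here is a minimal Python/NumPy sketch. It is not ProMAX's implementation. The bandpass is an Ormsby-style filter defined by four corner frequencies (f1 < f2 <= f3 < f4) with a trapezoidal, zero-phase amplitude response applied in the frequency domain. The trace kill simply zeroes the listed channels. The function names, corner frequencies and sample interval are assumptions chosen for illustration. ProMAX's Bandpass Filter may differ in details such as phase options and tapering.

```python
import numpy as np

def ormsby_bandpass(trace, dt, f1, f2, f3, f4):
    """Zero-phase trapezoidal (Ormsby-style) bandpass in the frequency domain.

    Amplitude response: 0 below f1, linear ramp to 1 at f2, flat to f3,
    linear ramp back to 0 at f4 (Hz). dt is the sample interval in seconds.
    """
    n = len(trace)
    freqs = np.fft.rfftfreq(n, d=dt)
    response = np.interp(freqs, [f1, f2, f3, f4], [0.0, 1.0, 1.0, 0.0],
                         left=0.0, right=0.0)
    # Multiplying by a real, non-negative response leaves the phases unchanged.
    return np.fft.irfft(np.fft.rfft(trace) * response, n=n)

def kill_traces(gather, channel_numbers, bad_channels):
    """Zero out every trace whose channel number is listed in bad_channels.

    gather: array (n_traces, n_samples); channel_numbers: one entry per trace.
    """
    out = np.array(gather, dtype=float, copy=True)
    out[np.isin(channel_numbers, bad_channels)] = 0.0
    return out

# Usage with made-up numbers: a 25 Hz "signal" plus 100 Hz "noise".
dt = 0.002                                  # 2 ms sampling (assumed)
t = np.arange(1000) * dt
signal = np.sin(2 * np.pi * 25 * t)
noisy = signal + 0.5 * np.sin(2 * np.pi * 100 * t)
filtered = ormsby_bandpass(noisy, dt, 5.0, 10.0, 40.0, 50.0)
```

In this example the 100 Hz component lies above f4, so it is removed. The 25 Hz component lies on the flat part of the trapezoid, so it passes unchanged.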

But not good enough. The third step in the pre-processing is deconvolution. Deconvolution compresses the source wavelet to sharpen the primaries. In its predictive (gapped) form it also suppresses short-period multiples and reverberations (ProMAX module: Spiking and Predictive Decon). Here we need to test critical parameters of the deconvolution, such as operator length and prediction gap, to figure out which ones give the best results. It takes time! Please be patient, and read the related books and papers to understand how deconvolution works and how to make it work better.
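To make the idea concrete, here is a minimal sketch of gapped predictive deconvolution in Python/NumPy. It assumes the classic Wiener approach: estimate a prediction filter from the trace autocorrelation, then subtract the predictable part of the trace. It is not ProMAX's code. The function name, operator length, gap and prewhitening value are hypothetical choices. With a gap of 1 sample the method reduces to spiking deconvolution. With a gap equal to the reverberation period it targets that multiple.

```python
import numpy as np

def predictive_decon(trace, n_op, gap, prewhitening=0.01):
    """Gapped predictive (Wiener) deconvolution of a single trace.

    n_op: prediction-operator length in samples; gap: prediction distance
    in samples (gap=1 is spiking deconvolution). prewhitening adds a small
    fraction of the zero-lag autocorrelation to the diagonal for stability.
    """
    trace = np.asarray(trace, dtype=float)
    n = len(trace)
    if n < gap + n_op:
        raise ValueError("trace too short for this operator length and gap")
    # Autocorrelation at non-negative lags 0..n-1.
    r = np.correlate(trace, trace, mode="full")[n - 1:]
    # Normal equations R a = g, with R the Toeplitz matrix of r[0..n_op-1].
    lags = np.arange(n_op)
    R = r[np.abs(lags[:, None] - lags[None, :])]
    R = R + prewhitening * r[0] * np.eye(n_op)
    g = r[gap:gap + n_op]
    a = np.linalg.solve(R, g)
    # Prediction-error filter: 1 at lag 0, -a at lags gap..gap+n_op-1.
    pef = np.zeros(gap + n_op)
    pef[0] = 1.0
    pef[gap:] = -a
    # Output = trace minus its predictable (e.g. reverberating) part.
    return np.convolve(trace, pef)[:n]

# Synthetic trace: a primary at sample 10 followed by a reverberation train
# repeating every 20 samples with reflection coefficient -0.5 (made-up values).
trace = np.zeros(200)
for k in range(10):
    trace[10 + 20 * k] = (-0.5) ** k
out = predictive_decon(trace, n_op=5, gap=20)
```

On this synthetic trace the primary at sample 10 is kept. The reverberations every 20 samples are reduced to a small residue left by the prewhitening.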

(5) Velocity Analysis

After the pre-processing flow, we have better-looking data in hand. It is time for velocity analysis (ProMAX module: Velocity Analysis), which takes a lot of time to complete, so be patient. First, we start with a large CDP interval, for example one analysis every 5000 CDPs in a section of 60000 CDPs. During the velocity analysis, we use the near-trace plot that we made before to recognize the main horizons. We also keep the paths of the direct wave, primaries and multiples in mind so that we can distinguish the primaries from the multiples. We try our best to keep the velocity picks away from multiples, although, honestly, that is not always easy.

Technically, velocity analysis gives us both stacking velocity and interval velocity. When velocity increases with depth, the stacking velocity at a given time is lower than the interval velocity at that time, because it is an average over all the layers above. We try to keep both of them increasing reasonably with depth, because in most cases the seismic velocities of layers or horizons increase with depth as physical properties such as density increase. The main quality control during velocity analysis is to look for flat events after applying NMO (normal moveout) correction. That is, if we pick the correct velocity for a horizon, its reflection becomes a straight, flat, coherent event in the CDP gather. Sometimes there are obvious coherent events that let us confirm the picked velocity with NMO, especially in the upper layers, but sometimes there are not, especially in the lower layers. In that unlucky situation, we use the semblance plot to find the hotspots of energy concentration. Keeping in mind that velocity increases with depth, we then pick the most plausible velocities.
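The NMO check and the relation between stacking and interval velocity can be sketched as follows. This is Python/NumPy with hypothetical function names, not the ProMAX implementation. The NMO correction assumes hyperbolic moveout, t(x)^2 = t0^2 + x^2/v^2. The interval velocities use the Dix equation, v_int,n^2 = (v_n^2 t_n - v_(n-1)^2 t_(n-1)) / (t_n - t_(n-1)), which assumes flat layers and modest offsets.

```python
import numpy as np

def nmo_correct(gather, offsets, dt, v_nmo):
    """Hyperbolic NMO correction: out(t0, x) = in(sqrt(t0**2 + x**2 / v(t0)**2), x).

    gather: (n_traces, n_samples); offsets in m; dt in s; v_nmo: one NMO
    (stacking) velocity per output sample, in m/s. Linear interpolation,
    no stretch mute. Times beyond the record are filled with zeros.
    """
    n_tr, n_s = gather.shape
    t0 = np.arange(n_s) * dt
    out = np.zeros((n_tr, n_s))
    for i in range(n_tr):
        t = np.sqrt(t0 ** 2 + (offsets[i] / v_nmo) ** 2)
        out[i] = np.interp(t, t0, gather[i], right=0.0)
    return out

def dix_interval_velocities(t0, v_rms):
    """Dix equation: interval velocity of each layer from RMS velocities
    picked at two-way times t0 (s). The first layer's interval velocity
    equals its RMS velocity."""
    t0 = np.asarray(t0, dtype=float)
    v = np.asarray(v_rms, dtype=float)
    inner = (v[1:] ** 2 * t0[1:] - v[:-1] ** 2 * t0[:-1]) / (t0[1:] - t0[:-1])
    return np.concatenate([v[:1], np.sqrt(inner)])

# Two-layer example (made-up): 1500 m/s for 2.0 s two-way time, then 2500 m/s
# for another 1.0 s. The RMS velocity at 3.0 s is ~1893 m/s, below 2500 m/s.
v_rms_2 = np.sqrt((1500.0 ** 2 * 2.0 + 2500.0 ** 2 * 1.0) / 3.0)
v_int = dix_interval_velocities([2.0, 3.0], [1500.0, v_rms_2])
```

The two-layer example illustrates the point in the text. The stacking (RMS) velocity at the base of the second layer is lower than that layer's interval velocity, and the Dix equation recovers 1500 and 2500 m/s.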

Again, be careful of multiples. They also show up as hotspots in the semblance plot and can be confusing in places. Their distinguishing feature is that they keep the same or a similar velocity all the way down as depth increases. In other words, the velocity function of the multiples is a nearly straight line (almost constant velocity) from top to bottom. In any case, try to stay away from multiples during the whole velocity analysis. Once we have built the brute velocity model with the large CDP interval, we can produce the so-called brute stack. To see more detail of the structures or other interesting features, we need to run velocity analysis at a denser CDP interval, for example every 2500 or 1000 CDPs, or even more densely in specific areas to image relatively small structures. So it depends on where the interesting areas are, how much detail we want to see, and what geological question we want to answer.
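A semblance panel like the one used for picking can be computed roughly as below. This is a simplified sketch that assumes nearest-sample NMO, a short time window and a made-up spike gather. ProMAX's Velocity Analysis will differ in interpolation, windowing and normalization. A multiple would produce a similar hotspot, but at a velocity that stays close to that of the shallow layers in which it bounced.

```python
import numpy as np

def semblance_scan(gather, offsets, dt, t0, velocities, half_window=2):
    """Semblance along NMO hyperbolas at zero-offset time t0 (s).

    For each trial velocity v, samples are taken at t(x) = sqrt(t0**2 + x**2/v**2)
    (nearest sample, no interpolation) over +/- half_window samples around t0,
    and semblance = sum_t (sum_x a)^2 / (N * sum_t sum_x a^2), which equals 1
    for a perfectly flattened, equal-amplitude event.
    """
    n_tr, n_s = gather.shape
    rows = np.arange(n_tr)
    result = []
    for v in velocities:
        num = den = 0.0
        for k in range(-half_window, half_window + 1):
            t = np.sqrt((t0 + k * dt) ** 2 + (offsets / v) ** 2)
            idx = np.rint(t / dt).astype(int)
            ok = idx < n_s                      # ignore times past the record
            amps = gather[rows[ok], idx[ok]]
            num += amps.sum() ** 2
            den += (amps ** 2).sum()
        result.append(num / (n_tr * den) if den > 0 else 0.0)
    return np.array(result)

# Made-up gather: one spike event with stacking velocity 2000 m/s at t0 = 1.0 s.
dt, n_s = 0.004, 1000
offsets = 200.0 + 100.0 * np.arange(24)
gather = np.zeros((24, n_s))
event = np.rint(np.sqrt(1.0 + (offsets / 2000.0) ** 2) / dt).astype(int)
gather[np.arange(24), event] = 1.0
velocities = np.arange(1500.0, 2501.0, 50.0)
panel = semblance_scan(gather, offsets, dt, 1.0, velocities, half_window=0)
best = velocities[np.argmax(panel)]
```

The hotspot (the maximum of the panel) falls at the velocity used to build the event.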

To Be Continued, see you next week, part 3!

Thursday, August 26, 2010

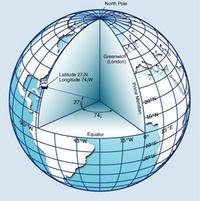

Give me the Earth, cut it up, and I'll give you a nautical mile!

e centre of the rise today. There has not been a lot to do since we started collecting multichannel seismic data. Maybe when we start recovering the OBSs I'll get to go up to the deck more often. We did sight a pod of whales today, though. This was a first for me. The whales were far off, so I couldn't make them out very well. All I saw was the jet of water they made every so often. Apart from this exciting event, all I have been doing during my watch is looking at the monitors, recording OBS crossings and thinking about how long it'd take to get to the next site. I keep asking myself, "How long will it take before I can take my eyes off the paper I'm reading and record the next crossing?"


I see now. The ship travels at 5 knots. The knot? A very convenient measure of "nautical" speed: 1 nautical mile per hour. Nautical: anything relating to navigation. We navigate on the seas. The Earth. The distances make sense: a nautical mile was originally defined as one minute of arc of latitude, so 1 nautical mile ~ 1.85 kilometers ~ 1.15 miles. I am thinking to myself: if I run at my average running pace (I do have a best time, but Nike+ tells me I run ~9' 30'' per mile), how long will it take me to run round the world? I do the math. There are (360 * 60) nautical miles to run. Those miles give ~(360 * 60 * 1.15) US miles. At my average running pace it'd take me ~236,000 minutes. That's ~5 months and 12 days. I think I'll put off running round the world. It's a long night. I'm done with my watch and I want to return to bed, but I begin thinking about why we have to cut the Earth into 360 parts. Why 360 and 60? I am sure there are interesting reasons.
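The running arithmetic above can be checked in a few lines of Python. The sketch assumes the modern definitions of the nautical mile (1852 m) and the statute mile (1609.344 m).

```python
# Back-of-the-envelope check of the run-round-the-world estimate.
NM_IN_M = 1852.0            # international nautical mile, in metres
MILE_IN_M = 1609.344        # statute (US) mile, in metres
PACE_MIN_PER_MILE = 9.5     # 9' 30'' per mile

nautical_miles = 360 * 60   # one nautical mile per minute of arc
statute_miles = nautical_miles * NM_IN_M / MILE_IN_M
minutes = statute_miles * PACE_MIN_PER_MILE
days = minutes / (60 * 24)
print(f"{statute_miles:,.0f} miles, {minutes:,.0f} minutes, {days:.0f} days")
```

This gives about 24,857 miles and roughly 236,000 minutes, or about 164 days.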


Wednesday, August 25, 2010

A Brief History of Our Understanding of Planet Earth.

We know surprisingly little about our planet! One reason is that we are not able to probe the depths of Earth directly and explore them. Another is that geological processes occur on much longer timescales than humans are used to dealing with. Most of what little we do know, we learned in the past few decades! We learned how to split the atom before we learned how our own planet worked. Here I provide a brief history of our journey toward understanding our home, planet Earth.

The age of Earth was a matter of scientific speculation for many centuries. Finally, in 1953, Clair Patterson at the University of Chicago determined the now-accepted age of Earth: 4,550 million (plus or minus 70 million) years. He accomplished this through uranium/lead dating of meteorites, which are leftover building blocks of the planets. But once we knew how old Earth was, a significant question came to the scientific forefront: if Earth is in fact ancient, then where are all the ancient rocks?

It took quite some time to answer this question, but the story had started decades earlier with Alfred Wegener, a German meteorologist from the University of Marburg. Wegener developed a theory to explain geologic anomalies, such as similar rocks and fossils being found on the east coast of the U.S. and the northwest coast of Africa. His theory was that Earth’s continents had once been joined together in a large landmass known as Pangea and had since split apart and moved to their present locations. This theory raised another question: what sort of force could cause the continents to move and plow through Earth’s crust?

In 1944, Arthur Holmes, an English geologist, published his textbook Principles of Physical Geology. In it he laid out a theory of continental drift in which convection currents inside Earth could be the force driving the continents’ motion. Many members of the scientific community still could not accept this as a viable explanation for the movement of continents.

At the time, many thought that the floor of Earth’s oceans was young and mucky from all the sediment eroded off the continents and washed down rivers into the oceans. During the Second World War, Harry Hess, a mineralogist from Princeton, was on board the USS Cape Johnson. The Johnson carried a new depth sounder called a fathometer, which was designed to aid shallow-water maneuvering. Hess realized the scientific potential of this device and never turned it off. To his surprise, he found that the seafloor was not shallow and covered with sediment. It was in fact deep and scored everywhere with canyons, trenches and other features. This was indeed a surprising and exciting discovery.

In the 1950s, oceanographers found the largest and most extensive mountain range on Earth, in the middle of the Atlantic Ocean. The mountain range, known as the Mid-Atlantic Ridge, was very interesting because it seemed to run right down the middle of the ocean and had a large canyon running along its crest. In the 1960s, core samples showed that the seafloor was young at the ridge and got progressively older with distance from the ridge. Harry Hess considered this and concluded that new crust was being formed at the ridge and pushed away from it as newer crust formed behind it. The process became known as seafloor spreading.

It was later discovered that where oceanic crust meets continental crust, the oceanic crust sinks beneath the continental crust into the interior of the planet. These areas were called subduction zones. Their presence explained where all the sediment had gone (back into the interior of the planet) and why the seafloor is so young (the oldest seafloor, near the Mariana Trench, is only around 175 million years old).

The term “Continental Drift” was then abandoned once it was realized that the entire crust moved, not just the continents. Various names were used for the giant separate chunks of crust, including “Crustal Blocks” and “Paving Stones.” In 1968, three American seismologists, in a paper in the Journal of Geophysical Research, called the chunks “Plates” and coined the name for the science that we still use today: “Plate Tectonics.”

Finally it all made sense! Plate tectonics was the surface manifestation of convection currents in Earth’s mantle. It explained where all the ancient rocks on Earth’s surface went: they were recycled back into the interior of the Earth. Plate tectonics answered many questions in geology, and Earth made a lot more sense.

In Earth’s mantle, convection involves upwellings and downwellings, like in a pot of boiling water. Subduction zones are the downwellings in Earth’s convection system. Upwellings, known as plumes, are thought to exist where hot material rises toward the surface from the very hot interior. These plumes are thought to cause surface volcanism in the form of Large Igneous Provinces, such as the Shatsky Rise. We are out here today, continuing our journey of learning how our planet works. The data collected during this survey will hopefully shed light on what processes produced the Shatsky Rise, and whether it was in fact formed by a plume from Earth’s interior.

Note: Most of the information in this blog can be found in Bill Bryson's book, A Short History of Nearly Everything.

Monday, August 23, 2010

Anatomy of an Airgun

een paid as to how we do it. Therefore, today I will discuss the not-so-humble air gun. The gun pictured at right is not one of our guns. It is a single gun, shown to give you an idea. We use an air gun array composed of 40 guns (similar to the one at right), 36 of which fire in tandem while 4 are left on standby. The total capacity of the array when operating at maximum is 6600 cubic inches of air. The four standby guns are used when we lose power to any of the other guns. However, there is a catch. The system cannot exceed 6600 cubic inches, and each of the four standby guns weighs in at a hefty 180 cubic inches. The largest guns are 360 cubic inches and the smallest are 60. So if a 60 goes out, we simply stop sending air to it, but if a 360 goes out, we turn on two of the standbys to bring the volume back to 6600.
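The standby rule described above can be written as a tiny Python sketch. It assumes that the array is at its full 6600 cubic inches before a failure and that standbys are switched on only in whole 180-cubic-inch units without exceeding that limit. The function names are hypothetical, and this is not the actual gun-controller logic.

```python
ARRAY_LIMIT_CUIN = 6600   # maximum total array volume (from the post)
STANDBY_CUIN = 180        # volume of each standby gun
N_STANDBY = 4             # number of standby guns

def standbys_to_fire(failed_cuin, standbys_free=N_STANDBY):
    """How many 180 cu in standbys can replace a failed gun without the
    array exceeding its 6600 cu in limit (whole guns only)."""
    return min(failed_cuin // STANDBY_CUIN, standbys_free)

def array_volume_after_failure(failed_cuin):
    """Array volume once the failed gun is shut off and standbys are added."""
    n = standbys_to_fire(failed_cuin)
    return ARRAY_LIMIT_CUIN - failed_cuin + n * STANDBY_CUIN
```

Under these assumptions a failed 360 is replaced by two standbys, which restores 6600. A failed 60 cannot be replaced without exceeding the limit, so the array runs at 6540.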


Migration in seismic data processing

Saturday, August 21, 2010

Marine Multi-channel Seismic Data Processing (part-1)

OK, let us get down to technical business. To process the marine reflection seismic data on the boat, we follow this workflow:

(1) SEG-D data input

We receive the raw shot data tape by tape from the recording system on the seismic boat. The data are in SEG-D format, and every .RAW file stored on the tape is one shot gather (one shot point with 468 channels and traces). We get about 3 tapes of raw shot gathers per day. Each tape is about 18 GB, with a maximum of 1273 .RAW files on a full tape. Even though we have the SEG-D data as soon as the boat is surveying, there is not much we can do until the whole seismic line is finished. We need the processed navigation file in .p190 format to set up the geometry for the subsequent processing, and the .p190 files can only be provided after a whole seismic line is completed (sometimes several short lines are processed into .p190s together to save on the program's license costs). However, we still have things to do. We can take a first look at the raw shot gathers (ProMAX module: Trace Display) to get the overall picture, including the direct wave path, reflected ray paths (primaries and multiples), noise and bad channels. We can figure out the main frequency range (ProMAX module: Interactive Spectral Analysis). We can also make a near-trace plot, using only the near channel of every shot, to get a first glimpse of the geology. This gives a first basic look at the major horizons, such as sediment layers, transition layers, volcanic layers or acoustic basement. Scientists are eager to see the seismic image as soon as possible, so the near-trace plot is a good thing to show them in near real time.
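Two things in this step are easy to sketch in Python: the data volumes implied by the numbers above, and the idea of a near-trace plot (one trace per shot). The array layout and the index of the near channel are assumptions. Reading SEG-D files is not shown, and near_trace_section is a hypothetical helper.

```python
import numpy as np

TAPE_BYTES = 18e9        # ~18 GB per full tape (from the text)
SHOTS_PER_TAPE = 1273    # maximum .RAW files (shot gathers) per tape
CHANNELS = 468           # traces per shot gather
TAPES_PER_DAY = 3

bytes_per_shot = TAPE_BYTES / SHOTS_PER_TAPE      # roughly 14 MB
bytes_per_trace = bytes_per_shot / CHANNELS       # roughly 30 kB
bytes_per_day = TAPES_PER_DAY * TAPE_BYTES        # roughly 54 GB

def near_trace_section(shots, near_index):
    """Keep one trace per shot (the near channel) to build a near-trace plot.

    shots: array (n_shots, n_channels, n_samples), e.g. assembled from the
    .RAW gathers by some SEG-D reader (not shown). Returns (n_shots, n_samples).
    """
    return np.asarray(shots)[:, near_index, :]
```

If channels 1 to 468 are stored in order along the second axis, the near channel (468) would be index 467.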

(picture below: near-trace plot of MGL1004 MCS line A)

(2) Set up Geometry

When we get the .p190 files for the seismic lines, we are ready to start the full processing flow. The first thing we need to do is set up the geometry (ProMAX module: 2D Marine Geometry Spreadsheet). We need to provide a lot of information to ProMAX: group interval (12.5 m), shot interval (50 m), sail line azimuth, source depth (9 m), streamer depth (9 m), shot point number, source location (easting X and northing Y), field file ID, water depth, date, time, near channel number (468), far channel number (1), minimum offset, maximum offset, CDP interval (6.25 m), full fold (59), etc. A lot! It takes some time to fill out the spreadsheet, and we need to be careful to make sure all the information matches up. It can be tricky, so double check, even triple check!
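Two of the spreadsheet values follow from the others by standard 2D relations. The CDP interval is half the group interval, and the nominal fold is (number of channels × group interval) / (2 × shot interval). Here is a minimal Python sketch with hypothetical function names.

```python
def cdp_interval(group_interval_m):
    """Bin (CDP) spacing for 2D marine data: half the receiver-group interval."""
    return group_interval_m / 2.0

def nominal_fold(n_channels, group_interval_m, shot_interval_m):
    """Nominal 2D fold: (channels * group interval) / (2 * shot interval)."""
    return n_channels * group_interval_m / (2.0 * shot_interval_m)

def max_offset(min_offset_m, n_channels, group_interval_m):
    """Far offset for a streamer with evenly spaced receiver groups."""
    return min_offset_m + (n_channels - 1) * group_interval_m

print(cdp_interval(12.5), nominal_fold(468, 12.5, 50.0))
```

The first two functions give 6.25 m and 58.5, which agree with the 6.25 m CDP interval and the full fold of about 59 listed above. The minimum offset is not given here, so max_offset takes it as an input.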

(3) Load Geometry

When the geometry set-up (the spreadsheet) is done, we can load the geometry into the raw shot data (ProMAX module: Inline Geometry Header Load). It takes time! Remember that every tape is 18 GB. It takes almost one hour to load one tape (perhaps less on a faster workstation). Once all the tapes, i.e., all the raw shot SEG-D files, have been loaded into ProMAX with the geometry, they are ready for the real part of the processing. Hold on a second. I say "real" here because this is where we start polishing the raw data, i.e., where change happens!

(picture on the left: ProMAX geometry assignment map)

To Be Continued, next week, part-2!

Friday, August 20, 2010

Saving 1,522 lives on the Titanic with technology from the Langseth: fact or fiction?

A while ago my girlfriend asked me, "Why do you study ancient volcanism?" I must admit I found it a little difficult to explain in clear and simple terms the motive behind what I do. "I want to know why Earth works the way it does," I remember explaining. I also tried to justify my interest in using computers to investigate the Earth: "You know, developments in existing seismic methods borrow from the fields of mathematics and medical imaging. Who knows, methods developed in this research may someday be used in other fields." I still convince myself that this is true. In reality, most scientists just love asking "why?", and sometimes we get amazing answers that lead to enormous technological benefits, most of which were not planned in the first place. This is a story of how the technology in use on the Langseth inherits a lot from the curiosity and dedication of scientists who asked "why?" Oh! And how things might have been different on the Titanic with these technologies.


It was Leonardo da Vinci, as early as 1490, who first observed: “If you cause your ship to stop and place the head of a long tube in the water and place the outer extremity to your ear, you will hear ships at a great distance from you.” He thus laid the basis of acoustic methods. Duayne and Kai have shown how the Langseth conducts seismic experiments with sound sources. Acoustic methods are also used by marine mammal observers (MMOs) to listen to aquatic life. With the sound sources, accurate positioning from GPS, and the theory of sound, we can image Earth's interior. See the connection? Curiosity channeled into scientific endeavour is the seed of technology. With this technology we can do better science, and we also reap enormous social benefits.

Thursday, August 19, 2010

Crew Profiles

1. Name/Title

-David Ng/Systems Analyst/Programmer

2. Favorite food/music/operating system

-Lobster/Rap and R&B/Ubuntu

3. You're stuck on a deserted island with only one person from this boat, who would you choose and why?

-Mike Duffy because he can cook

1. Name/Title

-Robert Steinhaus/Chief Science Officer

2. Favorite food/music/science

-Mac & Cheese/Classic Rock/Marine Seismic

3. You're stuck on a deserted island with only one person from this boat, who would you choose and why?

-Captain Landow because people would look for him

1. Name/Title

-Sir David Martinson/Chief Navigation

2. Favorite food/music/port

-Steak/Jazz/Aberdeen, Scotland

3. You're stuck on a deserted island with only one person from this boat, who would you choose and why?

-Pete (1st Engineer) because he can build and fix anything

1. Name/Title

-Bern McKiernan/Chief Acquisition

2. Favorite food/music/tool

-Animal/Mosh-pit music/Leatherman

3. You're stuck on a deserted island with only one person from this boat, who would you choose and why?

-Jason (Boatswain) because he's fun and handy with a small boat

More to come next week!!!!!

Seismic Refraction and Reflection

Today I am going to explain the nature of seismic reflection and refraction, and then briefly describe how we use them to extract information about the structure of Earth's subsurface. Let me start off by explaining what seismic refraction is.

The speed at which a seismic wave travels through a material depends strongly on that material's density and elastic properties, so seismic waves travel at different speeds through materials with different properties. Denser rocks also tend to be stiffer, so seismic waves generally travel faster through them. When a seismic wave travels from one material into another, it does not continue in the same direction but bends. This bending of the seismic wave path is known as seismic refraction. Seismic refraction is caused by the difference in seismic wave speed between the two materials and is described by Snell’s Law, sin(θ1)/v1 = sin(θ2)/v2, which is illustrated in the figure to the right. Snell’s Law takes two inputs. The first is the angle of incidence θ1, the angle between the approaching seismic wave path and the line perpendicular to the interface. The second is the seismic wave speed in each material, v1 and v2. From these it gives the angle of refraction θ2, the angle between the departing seismic wave path and the line perpendicular to the interface.

All waves (light, sound, etc.) undergo refraction when moving from one material to another. A good everyday example of refraction is a straw in a glass of water: the straw seems to bend where it enters the water. The straw is not actually bending. Instead, the path of the light traveling to your eyes from the submerged straw bends as it passes from the water into the air. This is because light travels at different speeds in water and in air. It is this refraction (bending) of the light path from water to air that makes the straw appear bent.

Now that we know what seismic refraction is, what is seismic reflection? The two are closely related. At an interface, part of the wave's energy is reflected back into material 1, leaving the interface at the same angle at which it approached, while the rest is refracted into material 2. When a seismic wave travels from a material with a lower seismic wave speed into a material with a higher seismic wave speed, the angle of refraction is larger than the angle of incidence. In this case, there is an angle of incidence at which the angle of refraction is 90 degrees and the refracted seismic wave runs parallel to the interface between the two materials. This angle of incidence is known as the critical angle, and it satisfies sin(θc) = v1/v2. When the angle of incidence is larger than the critical angle, no wave can be refracted into material 2, and all of the energy is reflected back into material 1 at the same angle as the incident seismic wave approached the interface. This post-critical case is called total internal reflection. These phenomena are illustrated in the figure just above, where the red wave path is critically refracted and the yellow wave path is reflected.
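Snell’s Law and the critical angle can be sketched in a few lines of Python. The function names and velocities are made up for illustration.

```python
import math

def refraction_angle(theta1_deg, v1, v2):
    """Snell's law, sin(theta1)/v1 = sin(theta2)/v2.

    Returns the refraction angle in degrees, or None beyond the critical
    angle, where no wave is transmitted into material 2.
    """
    s = math.sin(math.radians(theta1_deg)) * v2 / v1
    if s > 1.0:
        return None
    return math.degrees(math.asin(s))

def critical_angle(v1, v2):
    """Critical angle in degrees, sin(theta_c) = v1/v2; exists only if v2 > v1."""
    if v2 <= v1:
        return None
    return math.degrees(math.asin(v1 / v2))

# Made-up example: water (1500 m/s) over rock (3000 m/s).
theta_c = critical_angle(1500.0, 3000.0)          # about 30 degrees
theta_2 = refraction_angle(20.0, 1500.0, 3000.0)  # bends away from the normal
```

Going from slow to fast material, a 20 degree incidence refracts to a larger angle. Anything beyond about 30 degrees returns None, which corresponds to total internal reflection.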

So how do we use seismic reflection and refraction to extract information about the structure of Earth’s subsurface? In general, the density of material increases with depth in Earth, and so does the seismic wave speed. As seismic waves travel down through the subsurface, they encounter faster and faster materials. According to Snell’s Law, they are refracted and reflected back up to the surface, where we can record them. Using controlled seismic sources (like the airguns described in Kai’s post), we can send seismic waves into Earth’s subsurface and record the reflections and refractions when they arrive. By measuring when these refractions and reflections arrive, we can determine where the interfaces between different materials are in the subsurface and generate a cross-sectional view of the subsurface.
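The simplest case is flat layers and a vertical (zero-offset) ray. There, converting between arrival time and interface depth is just distance = speed × time, with the two-way time halved. Here is a minimal Python sketch using a hypothetical two-layer model and hypothetical function names.

```python
from itertools import accumulate

def two_way_times(thicknesses_m, velocities_mps):
    """Zero-offset two-way traveltime (s) to the base of each flat layer."""
    return list(accumulate(2.0 * h / v for h, v in zip(thicknesses_m, velocities_mps)))

def depths_from_times(twt_s, velocities_mps):
    """Invert: depth (m) of each interface from picked two-way times and
    the interval velocity of each layer."""
    depths, prev_t, z = [], 0.0, 0.0
    for t, v in zip(twt_s, velocities_mps):
        z += v * (t - prev_t) / 2.0   # one-way distance travelled in this layer
        depths.append(z)
        prev_t = t
    return depths

# Hypothetical model: 4000 m of water at 1500 m/s over 1000 m of sediment at 2000 m/s.
twt = two_way_times([4000.0, 1000.0], [1500.0, 2000.0])
```

Real reflection processing also has to handle non-zero offsets (the NMO step) and dipping layers, which this sketch ignores.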

Wednesday, August 18, 2010

Arrow of time, thermodynamics, and another transit to Yokohama

Now, why am I talking about this? Isn't this blog supposed to be about the Shatsky Rise cruise? OK, the reason is that we had another medical emergency, and we're currently heading to Yokohama again (this time, fortunately, no death is involved). Having two medical diversions in one cruise is pretty unusual. Will Sager, who has much more sea-going experience than I do, told me that he has been on ~40 cruises over 33 years and had never had bad luck to this degree before. This cruise is only my 10th, it is actually my very first as chief scientist, and look what an experience I'm having! But if you think about the above thermodynamical improbability, the likelihood of having two medical diversions in one cruise may not be so small… Or I should think about it the other way around... Having similarly bad luck in the future would be even less likely, so my future cruises may be entirely trouble-free!? Maybe it's time to start writing another sea-going proposal.

NSF has been very sympathetic to our situation. No further extension is possible this year, because we need to get back to Honolulu by September 14th to hand the OBSs over to another cruise. NSF therefore agreed to another Shatsky Rise cruise sometime between late 2011 and early 2012 to finish any unaccomplished portion of our planned seismic survey. The whole science party is grateful for this thoughtful decision.

Saturday, August 14, 2010

There, in the water! A shark! A torpedo! A maggie?

Ah yes. The magnetometer, or as we call it, Maggie. The magnetometer is a useful tool that has been around for over a century. A magnetometer is a scientific instrument used to measure the strength and/or direction of the magnetic field in the vicinity of the instrument. Magnetism varies from place to place. Differences in Earth's magnetic field can be caused by the differing nature of rocks and by the interaction between charged particles from the Sun and a planet's magnetosphere. Magnetometers are a common instrument on spacecraft that explore planets, or, in our case, on a seagoing vessel.

To keep it simple: as the liquid outer core moves around the solid inner core, it creates a magnetic dipole, i.e., a North and a South pole. This field would vary smoothly throughout the system if the Earth were a homogeneous structure. However, the Earth is a heterogeneous system, and this affects the magnetic field. The magnetometer allows us to detect the differences in the magnetic field created by changes within the structures of the Earth. Thus, a highly ferrous rock will have a greater effect on the surrounding magnetic field than a non-ferrous rock.

Magnetometers' claim to fame: they revealed that parts of the seafloor were magnetized in one direction and parts in the opposite direction. Duayne has already covered seafloor spreading and magnetism, so read his post for that. Without magnetometers, these magnetic polarity reversals recorded in the seafloor would not have been discovered.

When we first begin deploying seismic equipment, Maggie goes in the water first. It is towed about 150 m behind the boat at a depth of about 40 m. Maggie gives us constant updates so that we can track changes in magnetic field strength as we travel across the survey area. Using the data we collect, we can build a very accurate magnetic profile of Shatsky Rise once we have completed the survey.

Although Maggie is a humble-looking piece of equipment, we are glad to have it, and we can thank its predecessors for helping establish plate tectonics as an accepted theory of Earth's evolution.

Something about the air gun source

Friday, August 13, 2010

Quiz: How many iPods does it take to store reflection seismic data?

Tuesday, August 10, 2010

Streamer out----let's get the multi-channel reflection started

Sunday, August 8, 2010

Did Somebody Say XBT Party?